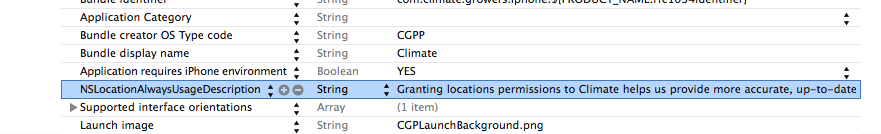

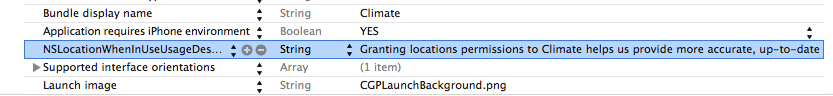

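iOS 8 has changed how location services are handled. First, as the CLLocationManager documentation describes, there are now two levels of location authorization: "when in use", which lets the app use location services while the app is in use, and "always", which also allows access while the app is in the background.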

Previously, an app could ask the user for authorization simply by starting to use location services:

```objc
self.locationManager = [[CLLocationManager alloc] init];
// This should open an alert that prompts the user, but in iOS 8 it doesn't!
[self.locationManager startUpdatingLocation];
```

Now, it does nothing.

Here are the steps to get your app back to its former glory. First, ask for permission in a way that works on both iOS 7 and iOS 8:

```objc
self.locationManager = [[CLLocationManager alloc] init];
// The delegate must be set, or locationManager:didChangeAuthorizationStatus: is never called.
self.locationManager.delegate = self;

// iOS 8 - request location services via requestWhenInUseAuthorization.
if ([self.locationManager respondsToSelector:@selector(requestWhenInUseAuthorization)]) {
    [self.locationManager requestWhenInUseAuthorization];
} else {
    // iOS 7 - We can't use requestWhenInUseAuthorization -- we'd get an unrecognized selector crash!
    // Instead, you just start updating location, and the OS will take care of prompting the user
    // for permissions.
    [self.locationManager startUpdatingLocation];
}
```

Then, in your CLLocationManagerDelegate, start updating once the user grants permission:

```objc
- (void)locationManager:(CLLocationManager *)manager didChangeAuthorizationStatus:(CLAuthorizationStatus)status
{
    // We only need to start updating location for iOS 8 -- iOS 7 users should have already
    // started getting location updates.
    if (status == kCLAuthorizationStatusAuthorizedAlways ||
        status == kCLAuthorizationStatusAuthorizedWhenInUse) {
        [manager startUpdatingLocation];
    }
}
```
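The code above leaves out one required step: on iOS 8, requestWhenInUseAuthorization silently does nothing unless the app’s Info.plist contains an NSLocationWhenInUseUsageDescription key (or NSLocationAlwaysUsageDescription if you call requestAlwaysAuthorization instead). Its string value is shown in the permission prompt; the wording below is only a placeholder:

```xml
<key>NSLocationWhenInUseUsageDescription</key>
<string>Your location is used to show what is nearby.</string>
```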

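The workflow works like this: on iOS 8, requestWhenInUseAuthorization displays the permission prompt (if the user hasn’t answered it yet). When the user responds, the delegate receives locationManager:didChangeAuthorizationStatus:, and if access was granted, that is where location updates begin. On iOS 7, calling startUpdatingLocation displays the prompt itself, and updates begin once the user allows them.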

http://blog.jerodsanto.net/2010/12/bridging-the-gap-between-javascripts-console-log-and-cocoas-nslog/

Man, this is a cool thing.

In Objective-C, a subclass can override its superclass’s private methods. If you accidentally give a method the same name as one in its parent class, your method silently replaces the parent’s, even when the parent calls that method internally. This is a very bad thing.
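A small illustration, with hypothetical class and method names: the parent calls its own undeclared helper, but because Objective-C dispatches messages at runtime, a subclass method with the same name wins.

```objc
#import <Foundation/Foundation.h>

// Hypothetical parent class. -configure is "private": it is implemented in the
// .m file but declared in no header, so subclass authors may not know it exists.
@interface Widget : NSObject
- (NSString *)build;
@end

@implementation Widget
- (NSString *)build {
    // Dynamic dispatch: this message goes to the receiver's class first,
    // even though Widget meant to call its own helper.
    return [self configure];
}

- (NSString *)configure {
    return @"Widget's private configure";
}
@end

// Hypothetical subclass that innocently picks the same method name.
@interface FancyWidget : Widget
@end

@implementation FancyWidget
- (NSString *)configure {
    return @"FancyWidget's configure";
}
@end
```

Here `[[FancyWidget new] build]` returns @"FancyWidget's configure", so Widget’s own logic never runs. A common defense is a distinctive prefix on private method names; Apple’s Cocoa coding guidelines reserve a leading underscore on method names for Apple’s own use, so a project- or class-specific prefix is the safer choice.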

As of this Monday, I work at The Climate Corporation doing iOS development!

The past few days, I’ve achieved the perfect blend of productivity and enjoyment. Lots of programming, lots of different projects, lots of things I’m interested in, it’s all great fun.

The one killer feature I had in mind with ColorMyWorld was to have it do real-time color analysis: point the video camera at an object, grab a sample swatch from the video frame, find its average color, and display the closest matching color. I never implemented this because the app seemed good enough without it, but the absence of this killer feature gnawed at my soul.

Armed with a few more months of iOS/Obj-C experience, I’ve finally circled back to ColorMyWorld to give it the attention it deserves. It’s been given a big code refactoring, but more importantly, I’ve taken a swing at implementing real-time analysis. That means working with the AVFoundation framework.

It was encouraging to see how quickly I was able to finish the first iteration of the feature. It took me a few hours to get the closest color to print to the debug console, and another few hours to get the camera to display on the screen and to allow the user to lock and unlock the sampling mode by holding their finger to the screen.

At this point, everything was looking pretty darn functional, but there was one problem: rotating the device really messed things up. I expected the camera view to rotate along with the device. It didn’t.

To explain what happened, I’ll first go over what I know about AVFoundation.

With AVFoundation, you set up a session to capture input from the device’s camera or the microphone (AVCaptureSession, AVCaptureDevice, and AVCaptureDeviceInput). You then set up the session with one or more ways to output the captured data (AVCaptureOutput, AVCaptureVideoDataOutput, etc.). The session then uses connections (AVCaptureConnection) to send data from the inputs to its outputs.

To display what the camera sees, you have to use a preview layer. A preview layer (AVCaptureVideoPreviewLayer) is a CALayer subclass that displays the video that the camera input is capturing. You basically create a preview layer, add it as a sublayer to a view, and set it to receive data from the capture session. Pretty basic stuff, only a few lines of code.
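A minimal sketch of that setup, assuming the user has already granted camera access and the caller owns the AVCaptureSession; AttachCameraPreview is a hypothetical helper name, not an AVFoundation API:

```objc
#import <AVFoundation/AVFoundation.h>
#import <UIKit/UIKit.h>

// Hypothetical helper: connects the default camera to `session` and shows its
// feed in `view`. Returns the preview layer (keep it, so its frame can be
// updated on layout), or nil if the camera input can't be added.
static AVCaptureVideoPreviewLayer *AttachCameraPreview(UIView *view, AVCaptureSession *session) {
    AVCaptureDevice *camera = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    if (!camera) {
        return nil; // e.g. the simulator has no camera
    }
    NSError *error = nil;
    AVCaptureDeviceInput *input = [AVCaptureDeviceInput deviceInputWithDevice:camera error:&error];
    if (!input || ![session canAddInput:input]) {
        NSLog(@"Could not add camera input: %@", error);
        return nil;
    }
    [session addInput:input];

    // The preview layer renders whatever the session captures.
    AVCaptureVideoPreviewLayer *previewLayer = [AVCaptureVideoPreviewLayer layerWithSession:session];
    previewLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
    previewLayer.frame = view.bounds;
    [view.layer addSublayer:previewLayer];
    return previewLayer;
}
```

The session still has to be started with `[session startRunning]` once its inputs and outputs are in place; an AVCaptureVideoDataOutput for reading frames is added to the same session via canAddOutput: and addOutput:.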

The problem is that rotating the view that contains the preview layer does not rotate the preview layer. There is a deprecated way of rotating the preview layer (its own orientation property), but the Apple-approved method is to grab the connection between the session and the preview layer and set its videoOrientation property. Okay, so in your view controller’s viewDidLayoutSubviews method, get the preview layer’s connection to the session and set its videoOrientation to match the device’s current orientation.
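One caveat when turning the UIDevice’s orientation into a videoOrientation: the two enums don’t line up by name. UIDeviceOrientationLandscapeLeft (home button on the right) corresponds to AVCaptureVideoOrientationLandscapeRight, and UIDeviceOrientation also has face-up, face-down, and unknown values with no capture equivalent. A sketch of an explicit mapping; the function name is hypothetical:

```objc
#import <AVFoundation/AVFoundation.h>
#import <UIKit/UIKit.h>

// Maps the device's physical orientation to a capture orientation.
// Landscape is mirrored between the enums: the device's LandscapeLeft
// (home button on the right) is the capture's LandscapeRight.
// Returns NO for face up/down and unknown, so the caller can keep
// whatever orientation it already had.
static BOOL VideoOrientationForDeviceOrientation(UIDeviceOrientation deviceOrientation,
                                                 AVCaptureVideoOrientation *outOrientation) {
    switch (deviceOrientation) {
        case UIDeviceOrientationPortrait:
            *outOrientation = AVCaptureVideoOrientationPortrait;
            return YES;
        case UIDeviceOrientationPortraitUpsideDown:
            *outOrientation = AVCaptureVideoOrientationPortraitUpsideDown;
            return YES;
        case UIDeviceOrientationLandscapeLeft:
            *outOrientation = AVCaptureVideoOrientationLandscapeRight;
            return YES;
        case UIDeviceOrientationLandscapeRight:
            *outOrientation = AVCaptureVideoOrientationLandscapeLeft;
            return YES;
        default:
            return NO;
    }
}
```

In viewDidLayoutSubviews you would pass in `[UIDevice currentDevice].orientation` and, when the function returns YES, assign the result to the preview layer connection’s videoOrientation.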

But I discovered something else that was weird: the image output from the camera was oriented differently than it was in the preview layer. My mind was boggled. Why is this happening? I already set the connection to the correct orientation!

Well, there’s a very good explanation for why this is: your AVCaptureSession captures raw data from the camera, but it has a few different ways of outputting that data. One way is to render that data in the preview layer, and another way is to send the data to a buffer that you can read and transform into UIImage instances.

These two things use different connections! That’s why setting the preview layer’s connection to orient the image did not change the image output’s orientation. To do that, you have to access the video data output, get its connection to the session, and change its videoOrientation as well.
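A sketch of keeping both connections in sync; ApplyVideoOrientation is a hypothetical helper, and the orientation would typically be computed from the device’s orientation in viewDidLayoutSubviews:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: the preview layer and the video data output each have
// their own AVCaptureConnection, so both need the new orientation.
static void ApplyVideoOrientation(AVCaptureVideoPreviewLayer *previewLayer,
                                  AVCaptureVideoDataOutput *videoDataOutput,
                                  AVCaptureVideoOrientation orientation) {
    // Connection feeding the on-screen preview.
    AVCaptureConnection *previewConnection = previewLayer.connection;
    if ([previewConnection isVideoOrientationSupported]) {
        previewConnection.videoOrientation = orientation;
    }

    // Connection feeding the sample buffers that get turned into UIImages.
    AVCaptureConnection *dataConnection = [videoDataOutput connectionWithMediaType:AVMediaTypeVideo];
    if ([dataConnection isVideoOrientationSupported]) {
        dataConnection.videoOrientation = orientation;
    }
}
```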

As an update to this post, I wasn’t able to sneak the GhettoDoro app by the App Store review process. I intentionally left the UI just about as rough and barebones as possible because the idea of getting Apple to publish an intentionally bad-looking app of mine would be funny. I think this is mostly The Smart-Ass 8th Grade Version of Me that thinks things like having an app named GhettoDoro is glorious.

After getting totally rejected by Apple, I decided to take GhettoDoro and use it as a platform to learn about unit testing and TDD in the Cocoa and Objective-C world, namely the OCMock and XCTest frameworks. It was pleasant using TDD to drive the process of design and refactoring — the timer logic is now in a model class so that the view controller can stay unpolluted.

I would have been perfectly content to leave this project unreleased, but after a week, I found myself using GhettoDoro more and more often. It’s actually useful! That meant it was worth polishing up and having its interface be more than just a juvenile punchline.

One thing that stood out to me while reviewing Apple’s iOS 7 UI design recommendations is that they champion a full-screen design modality where the entire screen is used to express information. Their Weather app is a great example of this. I wanted to take a swing at an interface like that as well.

My thinking was that the user should be able to look at the screen and tell how much time they had left without actually reading the time left. What I came up with was to have the background turn from green to red as it ticks down. Green and red are universally known as go and stop (hilarious to a colorblind person like myself), and the scheme also fits the tomato theme of the Pomodoro Technique, like how a green tomato is fresh and a red tomato is ripe.

One complication to implementing this feature was that the color needed to be based on the proportion of the time left vs. how much time was added to the timer. For example, if you start a 5-minute timer, it should be just as green as starting a 1-hour timer. And if a timer is almost red, adding 5 minutes to it should not push it back to full green. That meant the color had to be calculated with a ratio multiplier, where the ratio is the time left divided by the total time added to this timer.
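A minimal sketch of that ratio, assuming a simple linear blend from pure green to pure red; the function names are hypothetical, not GhettoDoro’s actual code. With it, a fresh 5-minute timer and a fresh 1-hour timer both start at 1.0, and adding 5 minutes to a 25-minute timer with 1 minute left only moves the ratio from 0.04 to 0.2 (6 of 30 minutes):

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: how "green" the background should be, from 1.0 (just
// started) down to 0.0 (time's up). totalSecondsAdded grows whenever the user
// adds time, so adding 5 minutes near the end only nudges the ratio back up.
static double GreenRatio(NSTimeInterval secondsLeft, NSTimeInterval totalSecondsAdded) {
    if (totalSecondsAdded <= 0) {
        return 0.0;
    }
    double ratio = secondsLeft / totalSecondsAdded;
    return MAX(0.0, MIN(1.0, ratio)); // clamp to [0, 1]
}

// Linear blend: ratio 1.0 gives pure green, ratio 0.0 gives pure red.
static void BackgroundRGB(double ratio, double *red, double *green, double *blue) {
    *red = 1.0 - ratio;
    *green = ratio;
    *blue = 0.0;
}
```

On iOS, the three components would then go into `[UIColor colorWithRed:green:blue:alpha:]` for the background view.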

There’s also a lot of behind-the-curtains trickery involved to support background notifications and pausing/resuming the timer. It was a bit challenging in the sense that there are a lot more potential cases to consider. The timer can be started/stopped/paused/resumed, but the app can be in the foreground or the background as well. And when it’s paused and started again, does the notification get rescheduled to the new timer end date? That sort of stuff.
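One way to model that, as a sketch: keep only the remaining time while paused, and recompute the end date (which a scheduled notification’s fireDate would follow) on every resume. The class and method names are hypothetical, and it assumes ARC; passing `now` in explicitly keeps the logic testable without waiting on a real clock:

```objc
#import <Foundation/Foundation.h>

// Hypothetical timer model (assumes ARC): stores either an end date (running)
// or the seconds remaining (paused). No UIKit, so it is easy to unit test.
@interface PomodoroModel : NSObject
@property (nonatomic, readonly) NSDate *endDate;               // nil while paused
@property (nonatomic, readonly) NSTimeInterval pausedRemaining;
- (void)startWithDuration:(NSTimeInterval)duration now:(NSDate *)now;
- (void)pauseAt:(NSDate *)now;
- (void)resumeAt:(NSDate *)now;
- (void)addSeconds:(NSTimeInterval)seconds;
- (NSTimeInterval)remainingAt:(NSDate *)now;
@end

@implementation PomodoroModel
- (void)startWithDuration:(NSTimeInterval)duration now:(NSDate *)now {
    _endDate = [now dateByAddingTimeInterval:duration];
    _pausedRemaining = 0;
}

- (void)pauseAt:(NSDate *)now {
    if (!_endDate) return;              // already paused
    _pausedRemaining = [self remainingAt:now];
    _endDate = nil;                     // the pending notification should be cancelled here
}

- (void)resumeAt:(NSDate *)now {
    if (_endDate) return;               // already running
    // The end date moves to now + whatever was left, so any notification
    // must be rescheduled to this new fireDate.
    _endDate = [now dateByAddingTimeInterval:_pausedRemaining];
}

- (void)addSeconds:(NSTimeInterval)seconds {
    if (_endDate) {
        _endDate = [_endDate dateByAddingTimeInterval:seconds];
    } else {
        _pausedRemaining += seconds;
    }
}

- (NSTimeInterval)remainingAt:(NSDate *)now {
    if (!_endDate) return _pausedRemaining;
    return MAX(0.0, [_endDate timeIntervalSinceDate:now]);
}
@end
```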

These different use cases and scenarios can be tough, but taking a TDD approach helped immensely. It allowed me to really focus on the app requirements, while writing the production code was almost a secondary concern. The beauty of TDD is that it lets you really build your system to do what you want it to do.

As I am finding out in a current project, however, there’s one exception where it makes sense to drop TDD, and that’s during a spike, when you need to explore a problem that you don’t have enough experience to know how to design, approach, or solve. You can’t write a test to specify what your code should do if you just don’t know what it should do. Even if you think you know what it should roughly do from a black-box, outside-in, functionality perspective, your initial assumptions about your code’s API and its expected behavior are likely to change.

I feel like a good way to tell if you should drop TDD temporarily is if you’re writing a unit test and you say to yourself, “I am pretty sure I will need to drastically rewrite this test.” In that case, there’s no point writing something you’re likely to junk entirely. Of course, the rub is that you have to circle back and make sure you write that unit test afterwards.

And as you can see, I’ve been working with XCTest and OCMock for iOS unit testing.

It does feel a little bit like unit testing is a second-class citizen in iOS, at least compared to Rails. Or maybe I’m trippin’.

I don’t know. I have consciously chosen not to have too entrenched of an opinion about programming topics. There are one million ways to skin a cat, and each way has its pros and cons.

I am a believer in the Pomodoro Technique, a very smart productivity hack to structure your work efforts in 25-minute sprints, punctuated by 5-minute breaks.

Lately, I’ve been using a mechanical Ikea timer, and I hate it. It makes an awful ticking sound, and its alarm (when it actually manages to work) is jarring. I considered using the iOS 7 built-in timer, but switching between 5- and 25-minute increments is clumsy. All I need for a timer is to start/stop/pause/resume it, and to add in 5-minute increments. Is that so hard?

I know there are probably some very good Pomodoro timer apps out there, but I thought building one myself would be a good way to learn more about how to use timers in iOS.

So I built one myself. And I made it revel in all of its brain-dead-simple glory. It’s so simple that I christened it GhettoDoro. I even ripped the Windows 3.1 startup sound to use for the alert. I made the app icon the ugliest tomato (pomodoro) you’ve ever seen.

And I submitted it to the App Store, and I totally expect it to be rejected! There are tons of timer apps out there, and mine is definitely way uglier, but damnit, I’m going to use this thing, and I’m going to enjoy it.

And in case you were wondering: yes, I learned a lot of good things from this short project.

I know how to create an NSTimer. I know how to schedule it on the run loop to repeat. I know how to invalidate it to stop it from firing again.
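Those three steps can be sketched as a small hypothetical class that counts ticks:

```objc
#import <Foundation/Foundation.h>

// Hypothetical ticker showing the three NSTimer steps: create, schedule on
// the run loop to repeat, and invalidate to stop it from firing again.
@interface Ticker : NSObject
@property (nonatomic, strong) NSTimer *timer;
@property (nonatomic) NSUInteger ticks;
- (void)start;
- (void)stop;
@end

@implementation Ticker
- (void)start {
    [self stop]; // never leave an old timer running
    // scheduledTimerWithTimeInterval:... creates the timer and adds it to the
    // current run loop in the default mode; repeats:YES fires it every interval.
    self.timer = [NSTimer scheduledTimerWithTimeInterval:1.0
                                                  target:self
                                                selector:@selector(tick:)
                                                userInfo:nil
                                                 repeats:YES];
}

- (void)tick:(NSTimer *)timer {
    self.ticks += 1;
}

- (void)stop {
    // invalidate removes the timer from its run loop; since a scheduled timer
    // retains its target, this also breaks that retain cycle.
    [self.timer invalidate];
    self.timer = nil;
}
@end
```

A scheduled timer only fires while its run loop is running, so it stops ticking once the app is suspended in the background, which is why the end-of-timer alert needs a local notification instead.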

I know how to set up a UILocalNotification, which is the class you use when you need to have your app display a notification to the user (banner or alert style). I know how to schedule it with UIApplication (scheduleLocalNotification:) to fire at a specific time (fireDate), and how to cancel a scheduled notification (cancelLocalNotification:).
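A sketch of scheduling and cancelling the end-of-timer alert with UILocalNotification (the API of that era; iOS 10 later deprecated it in favor of the UserNotifications framework). The helper names and alert text are hypothetical, and on iOS 8 and later the app must also have called registerUserNotificationSettings: for the alert to appear:

```objc
#import <UIKit/UIKit.h>

// Hypothetical helper: schedules an alert for when the timer ends.
static UILocalNotification *ScheduleTimerDoneNotification(NSDate *fireDate) {
    UILocalNotification *note = [[UILocalNotification alloc] init];
    note.fireDate = fireDate;          // timeZone left nil: fireDate is an absolute moment
    note.alertBody = @"Pomodoro finished!";
    note.soundName = UILocalNotificationDefaultSoundName;
    [[UIApplication sharedApplication] scheduleLocalNotification:note];
    return note;                       // keep a reference so it can be cancelled later
}

// Hypothetical helper: cancels it, e.g. when the timer is paused or stopped.
static void CancelTimerDoneNotification(UILocalNotification *note) {
    if (note) {
        [[UIApplication sharedApplication] cancelLocalNotification:note];
    }
}
```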

I learned a lot about working with time intervals.
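For example, turning an NSTimeInterval (a double, in seconds) into an mm:ss countdown. A sketch; the function name is hypothetical, and rounding up is a design choice so the display reads 25:00 at the start and only shows 00:00 once time is truly up:

```objc
#import <Foundation/Foundation.h>
#include <math.h>

// Formats a countdown as "mm:ss", rounding partial seconds up and
// clamping negative intervals to zero.
static NSString *FormatCountdown(NSTimeInterval secondsLeft) {
    long total = (long)ceil(MAX(0.0, secondsLeft));
    return [NSString stringWithFormat:@"%02ld:%02ld", total / 60, total % 60];
}
```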

And I made my first dalliance into Apple’s audio APIs by using the C-based System Sound Services API (part of the AudioToolbox framework) to play the alert sound and vibrate.
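Playing an alert sound and vibrating through that C API looks roughly like this. A sketch, assuming the sound is bundled as alarm.caf (a hypothetical file name) and the helper is called on the main thread; System Sound Services is meant for short sounds (30 seconds or less):

```objc
#import <AudioToolbox/AudioToolbox.h>
#import <Foundation/Foundation.h>

// Hypothetical helper: registers the bundled sound once, then plays it and vibrates.
static void PlayAlarm(void) {
    static SystemSoundID alarmSound = 0;
    if (alarmSound == 0) {
        NSURL *url = [[NSBundle mainBundle] URLForResource:@"alarm" withExtension:@"caf"];
        if (url) {
            // Toll-free bridge the NSURL to the CFURLRef the C API expects.
            AudioServicesCreateSystemSoundID((__bridge CFURLRef)url, &alarmSound);
        }
    }
    if (alarmSound != 0) {
        AudioServicesPlaySystemSound(alarmSound);
    }
    // Vibrates on devices that support it.
    AudioServicesPlaySystemSound(kSystemSoundID_Vibrate);
}
```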

And I wrestled mightily with the Apple Developer certificate signing and device provisioning, and I have a much better sense of how it works.

So all in all, my ghetto Pomodoro timer app was a rousing success!